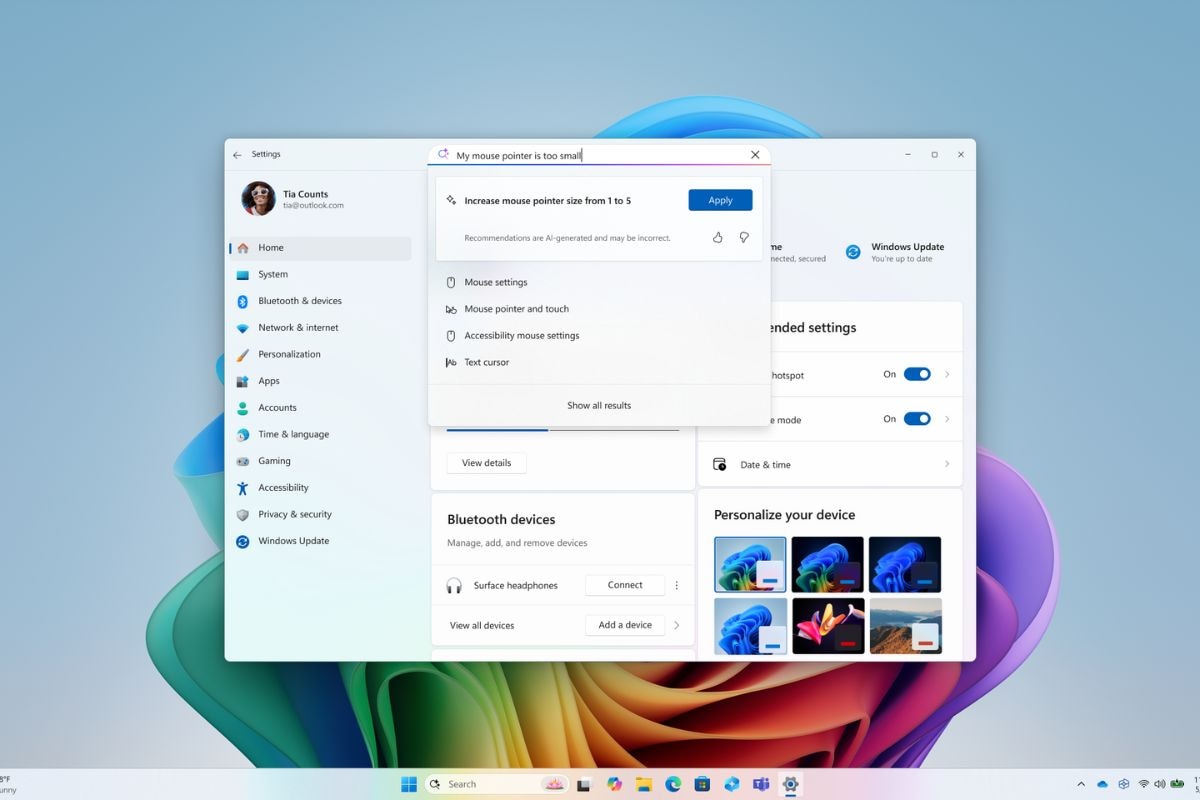

Microsoft has introduced a new artificial intelligence (AI) model that can run locally on a device. Last week, the Redmond-based tech veteran released new Windows 11 features in Beta, including a new AI agent in the Settings app. The agent lets users describe what they want to do in the Settings menu, and it then either navigates to the relevant option or carries out the change autonomously. The company has now confirmed that the feature is powered by the Mu small language model (SLM).

Microsoft’s Mu AI Model Powers the Agent in Windows Settings

In a blog post, the tech veteran detailed its new AI model. Mu is currently deployed fully on-device on compatible Copilot+ PCs, where it runs on the device’s neural processing unit (NPU). Microsoft has worked on optimizing the model and reducing its latency, and claimed that it “responds at more than 100 tokens per second, meeting the demanding UX requirements of the agent in Settings scenario.”
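To put the 100-tokens-per-second figure in perspective, the arithmetic below converts a decoding rate into wall-clock time. It is a simplified sketch that assumes a steady decoding rate and ignores the time spent reading the input; the 20-token response length and the 500 ms budget are hypothetical values chosen for illustration, not figures from Microsoft.

```python
def decode_time_ms(num_tokens: int, tokens_per_second: float = 100.0) -> float:
    """Time to generate num_tokens at a steady decoding rate, in milliseconds."""
    # Multiply first so whole-number inputs divide exactly.
    return num_tokens * 1000 / tokens_per_second


def token_budget(latency_budget_ms: int, tokens_per_second: int = 100) -> int:
    """Largest whole number of tokens that fits inside a latency budget."""
    return latency_budget_ms * tokens_per_second // 1000


# Hypothetical example: a 20-token settings action at 100 tokens per second.
print(decode_time_ms(20))  # 200.0
# Hypothetical example: how many tokens fit in half a second.
print(token_budget(500))   # 50
```

At that rate each output token costs about 10 ms, so a short, structured answer naming a setting and an action can be produced well inside a sub-second interaction.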

Mu is built on a transformer-based encoder-decoder architecture with 330 million parameters, which makes the SLM a good fit for small-scale deployment. In this architecture, the encoder first converts the input into a fixed-length representation, which the decoder then analyzes to produce the output.
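The division of labour described above can be illustrated with a toy model. This is a minimal sketch, not a transformer and not Mu: the hash-based `embed` function, the mean-pooling `encode` step, the 8-wide representation, and the small vocabulary are all hypothetical stand-ins. What it does show is the key property of the encoder-decoder split: inputs of any length are compressed into one fixed-length vector, and the decoder produces its output step by step from that vector alone.

```python
import hashlib
from typing import List

DIM = 8  # hypothetical width of the fixed-length representation


def embed(token: str) -> List[float]:
    # Toy stand-in for a learned embedding: deterministic values derived from a hash.
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:DIM]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def encode(tokens: List[str]) -> List[float]:
    # Encoder: compress a variable-length input into one DIM-sized vector
    # (mean pooling here; a real encoder would use attention layers).
    if not tokens:
        return [0.0] * DIM
    vectors = [embed(t) for t in tokens]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def decode(memory: List[float], vocab: List[str], max_steps: int = 2) -> List[str]:
    # Decoder: emit output tokens one step at a time; each step scores candidates
    # against the encoder's fixed-length vector and skips tokens already produced.
    output: List[str] = []
    for _ in range(max_steps):
        candidates = [w for w in vocab if w not in output]
        if not candidates:
            break
        output.append(max(candidates, key=lambda w: dot(memory, embed(w))))
    return output


memory = encode("lower screen brightness at night".split())
print(len(memory))  # 8, the same length for any input
print(decode(memory, ["display", "night_light", "bluetooth", "mouse"]))
```

Because the decoder only ever sees the fixed-size `memory` vector, its cost per step does not grow with the length of the user's request, which is one reason this layout suits constrained hardware.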

Microsoft said this architecture was preferred for its efficiency and ease of optimization, both of which are necessary when working with limited computational bandwidth. To fit the model within the NPU’s constraints, the company also chose suitable layer dimensions and optimized how parameters are distributed between the encoder and decoder.

Mu was distilled from the company’s Phi models and trained using A100 GPUs on Azure Machine Learning. Distilled models are typically more efficient than the model they are distilled from. Microsoft further improved Mu’s performance by training it on task-specific data and fine-tuning it with low-rank adaptation (LoRA) methods. Interestingly, the company claims that Mu performs at a similar level to Phi-3.5-mini despite being one-tenth its size.
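The core idea of LoRA can be shown in a few lines. The sketch below follows the standard LoRA formulation, in which a frozen weight matrix `w` (d x k) is adjusted by the product of two small trainable matrices `b` (d x r) and `a` (r x k), scaled by `alpha / r`. It is a generic, pure-Python illustration of the technique; the matrix sizes and values are hypothetical, and nothing here reflects Microsoft’s actual training setup.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    # Plain matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(x[i][t] * y[t][j] for t in range(len(y)))
             for j in range(len(y[0]))]
            for i in range(len(x))]


def lora_merge(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    # w: frozen d x k weight; b: d x r and a: r x k are the only trained parts.
    # Effective weight is w + (alpha / r) * (b @ a), with r the adapter rank.
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def lora_trainable_params(d: int, k: int, r: int) -> int:
    # A rank-r adapter trains r * (d + k) values instead of all d * k.
    return r * (d + k)


w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [0.0]]  # d x r with r = 1
a = [[0.0, 2.0]]    # r x k
print(lora_merge(w, a, b, alpha=1.0))        # [[1.0, 2.0], [0.0, 1.0]]
print(lora_trainable_params(1024, 1024, 8))  # 16384, versus 1048576 for the full matrix
```

In the usual LoRA recipe `b` starts at zero, so fine-tuning begins from the unchanged base weights and only the small adapter matrices are updated, which keeps task-specific tuning cheap.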

Adapting Mu for Windows Settings

The tech giant also had to solve another problem before the model could power the AI agent in Settings: it had to handle the input and output tokens needed to change hundreds of system settings. This required not only broad knowledge of those settings but also low latency, so that tasks complete almost instantly.

Therefore, Microsoft scaled up its training data extensively, going from 50 settings to hundreds, and used techniques such as synthetic labeling and noise injection to teach the AI how people phrase their requests. After training on more than 3.6 million examples, the model became fast and accurate enough to respond in less than half a second, the company claimed.
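The two data techniques named above can be sketched generically. In the example below, synthetic labeling fills hypothetical phrasing templates with setting names so that each generated query automatically carries the correct label, and noise injection adds typo-style variants (an adjacent-character swap or a dropped character). The templates, setting identifiers, and typo rules are all assumptions for illustration; Microsoft has not published its exact pipeline.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical templates and setting labels, for illustration only.
TEMPLATES = ["turn on {}", "enable {}", "how do I switch on {}"]
SETTINGS = {"dark mode": "personalization.dark_mode", "night light": "display.night_light"}


def synthesize(templates: List[str], settings: Dict[str, str]) -> List[Tuple[str, str]]:
    # Synthetic labeling: every filled template inherits its setting's label.
    return [(t.format(name), label) for name, label in settings.items() for t in templates]


def add_typo(query: str, rng: random.Random) -> str:
    # Noise injection: swap two adjacent characters or drop one character.
    if len(query) < 2:
        return query
    i = rng.randrange(len(query) - 1)
    if rng.random() < 0.5:
        chars = list(query)
        chars[i], chars[i + 1] = chars[i + 1], chars[i]
        return "".join(chars)
    return query[:i] + query[i + 1:]


def augment(examples: List[Tuple[str, str]], copies: int, seed: int = 0) -> List[Tuple[str, str]]:
    # Keep each clean example and add `copies` noisy variants with the same label.
    rng = random.Random(seed)
    out: List[Tuple[str, str]] = []
    for query, label in examples:
        out.append((query, label))
        out.extend((add_typo(query, rng), label) for _ in range(copies))
    return out


data = augment(synthesize(TEMPLATES, SETTINGS), copies=2)
print(len(data))  # 6 clean examples, each with 2 noisy copies: 18
```

The labels never need manual annotation here: they are inherited from the template slot, which is what makes this style of generation cheap to scale to many settings.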

One important challenge was that Mu performed better on multi-word queries than on short or vague phrases. For example, typing “lower screen brightness at night” gives it more context than typing only “brightness”. To address this, Microsoft continues to show traditional keyword-based search results when a query is too vague.
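The fallback behaviour described above amounts to a routing decision. The sketch below assumes a simple word-count rule: queries shorter than a hypothetical `MIN_WORDS` threshold get keyword search results, while longer, more descriptive queries are handed to the agent model. The threshold, the settings index, and the `route` function are all illustrative assumptions; Microsoft has not said how it decides that a query is too vague.

```python
from typing import Dict, List, Tuple

# Hypothetical settings index: setting name -> keywords.
SETTINGS_INDEX: Dict[str, List[str]] = {
    "Display brightness": ["brightness", "screen", "display"],
    "Night light": ["night", "light", "blue", "warm"],
    "Bluetooth": ["bluetooth", "pair", "headphones"],
}

MIN_WORDS = 3  # hypothetical cut-off for a "descriptive" query


def keyword_search(words: List[str]) -> List[str]:
    # Traditional lexical match: any query word among a setting's keywords.
    return [name for name, keys in SETTINGS_INDEX.items() if any(w in keys for w in words)]


def route(query: str) -> Tuple[str, List[str]]:
    words = query.lower().split()
    if len(words) < MIN_WORDS:
        # Too little context for the agent: fall back to keyword results.
        return "keyword", keyword_search(words)
    # Descriptive query: hand it to the agent model (not modeled here).
    return "agent", []


print(route("brightness"))                        # ('keyword', ['Display brightness'])
print(route("lower screen brightness at night"))  # ('agent', [])
```

A word count is the crudest possible signal; a production system could just as well use the model’s own confidence, but the shape of the decision (agent for rich queries, lexical search for thin ones) stays the same.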

Microsoft also observed language-based ambiguity in cases where a request could apply to more than one setting (for example, “increase brightness” may refer to the device’s own screen or to an external monitor). To resolve this ambiguity, the AI model currently prioritizes the most commonly used settings. This is an area the tech veteran continues to refine.
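The “most commonly used” rule can be expressed as a simple tie-breaker. This minimal sketch assumes a hypothetical table of candidate settings per phrase and hypothetical usage counts; the numbers are made up purely to illustrate the selection logic and are not Microsoft data.

```python
from typing import Dict, List

# Hypothetical: settings a phrase could refer to, and illustrative usage counts.
CANDIDATES: Dict[str, List[str]] = {
    "increase brightness": ["Built-in display brightness", "External monitor brightness"],
}
USAGE_COUNTS: Dict[str, int] = {
    "Built-in display brightness": 90,
    "External monitor brightness": 10,
}


def resolve(phrase: str) -> str:
    options = CANDIDATES.get(phrase.lower())
    if not options:
        raise KeyError(f"no candidate settings for {phrase!r}")
    # Ambiguous phrase: prefer whichever matching setting is used most often.
    return max(options, key=lambda name: USAGE_COUNTS.get(name, 0))


print(resolve("Increase brightness"))  # Built-in display brightness
```

The obvious weakness, and presumably part of what still needs refining, is that a global popularity rule picks the wrong target for a user who mostly works on an external monitor.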